PiAv is an audio-visual installation that explores sonic and visual improvisation by exploiting glitches, lost network connections, and random abnormalities that arise when a network of microcomputers is pushed to its limits. A closed network of ten microcomputers creates a cross-pollinated audio-visual system in which each node (one microcomputer) of sound or video is influenced by its connection, or lack of connection, to the other nodes in the network. There are eight sound nodes and two video nodes. In addition to being influenced by stochastic processes arising from its networked status, each node is independently driven by its own set of stochastic instructions. The combination of influences creates an ever-changing audio-visual experience. In terms of sonic and visual interaction, combinations of pitch clusters and samples from each sound node influence the two video nodes. The video nodes generate abstract visual images from computer code inspired by traditional analog video feedback techniques. In return, the color spectra and motion velocity within the visual images influence the sound processing on each of the eight sound nodes, creating a meta-feedback system. The total audio-visual system cycles through a series of meta-scenes that vary from drone-like, contemplative clusters to riotous bursts of sound and energy. The meta-scenes provide a foundation for the basic states of sounds and images, while the limitations of each microcomputer’s processor and its state of connection to the network allow for the unexpected glitch.

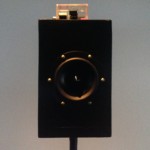

PiAv uses ten networked Raspberry Pi computers. Eight custom-made speakers with embedded Raspberry Pi computers run networked Pure Data patches to provide a continually evolving soundscape. The video images are created using two Raspberry Pi camera modules, basic analog video feedback techniques, and custom Python scripts for video processing. A laptop running Max/MSP serves as a central messaging hub for the total system.
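
The interplay between each node's own stochastic drive and its networked influence can be illustrated with a small simulation. This is only a hedged sketch and not the installation's actual Pure Data, Max/MSP, or Python code: the `Node` class, the `simulate` function, and the `drop_prob`, `pull`, and `jitter` parameters are hypothetical names chosen for illustration, and the specific sound-to-video and video-to-sound mappings are collapsed into a single control value per node.

```python
import random


class Node:
    """One hypothetical node holding a single control value in [0, 1]."""

    def __init__(self, name, rng):
        self.name = name
        self.rng = rng
        self.value = rng.random()
        self.connected = True

    def step(self, peers, drop_prob=0.1, pull=0.3, jitter=0.05):
        # Assumption: the link is lost or regained at random on every step.
        self.connected = self.rng.random() >= drop_prob
        # Independent stochastic drive: a small random walk.
        self.value += self.rng.uniform(-jitter, jitter)
        # Networked influence: drift toward the mean of reachable peers.
        reachable = [p.value for p in peers if p is not self and p.connected]
        if self.connected and reachable:
            mean = sum(reachable) / len(reachable)
            self.value += pull * (mean - self.value)
        # Keep the control value inside its valid range.
        self.value = min(1.0, max(0.0, self.value))


def simulate(steps=100, seed=0):
    """Run eight sound nodes and two video nodes for a number of steps."""
    rng = random.Random(seed)
    nodes = [Node(f"sound{i}", rng) for i in range(8)]
    nodes += [Node(f"video{i}", rng) for i in range(2)]
    for _ in range(steps):
        for node in nodes:
            node.step(nodes)
    return nodes


if __name__ == "__main__":
    for node in simulate(steps=50, seed=1):
        print(f"{node.name}: {node.value:.3f} connected={node.connected}")
```

In this sketch a disconnected node keeps wandering on its own random walk, so its value can diverge from the group until the link returns, which is one simple way to picture how a dropped connection might surface as an unexpected change.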